Decision Tree Algorithm

Save, fill in, print, done!

The best way to create a Decision Tree Algorithm? Check out this professional Decision Tree Algorithm template right away!

Available file formats: .pdf

- Validated by a professional

- 100% customizable

- Language: English

- Digital download (560.19 kB)

- You receive the download link immediately after payment

- We recommend downloading this file to your computer.


How to draft a Decision Tree Algorithm? An easy way to start completing your document is to download this Decision Tree Algorithm template now!

Every day brings new projects, emails, documents, and task lists, and often they are not that different from the work you have done before. Many of our day-to-day tasks are similar to something we have done before. Don't reinvent the wheel every time you start to work on something new!

Instead, we provide this standardized Decision Tree Algorithm template, with text and formatting, as a starting point to help professionalize the way you work. Our private, business, and legal document templates are regularly screened by professionals. If time or quality is of the essence, this ready-made template can help you save time and focus on the topics that really matter!

Using this document template guarantees that you will save time, cost, and effort! It comes in Microsoft Office format and is ready to be tailored to your personal needs. Completing your document has never been easier!

Download this Decision Tree Algorithm template now for your own benefit!

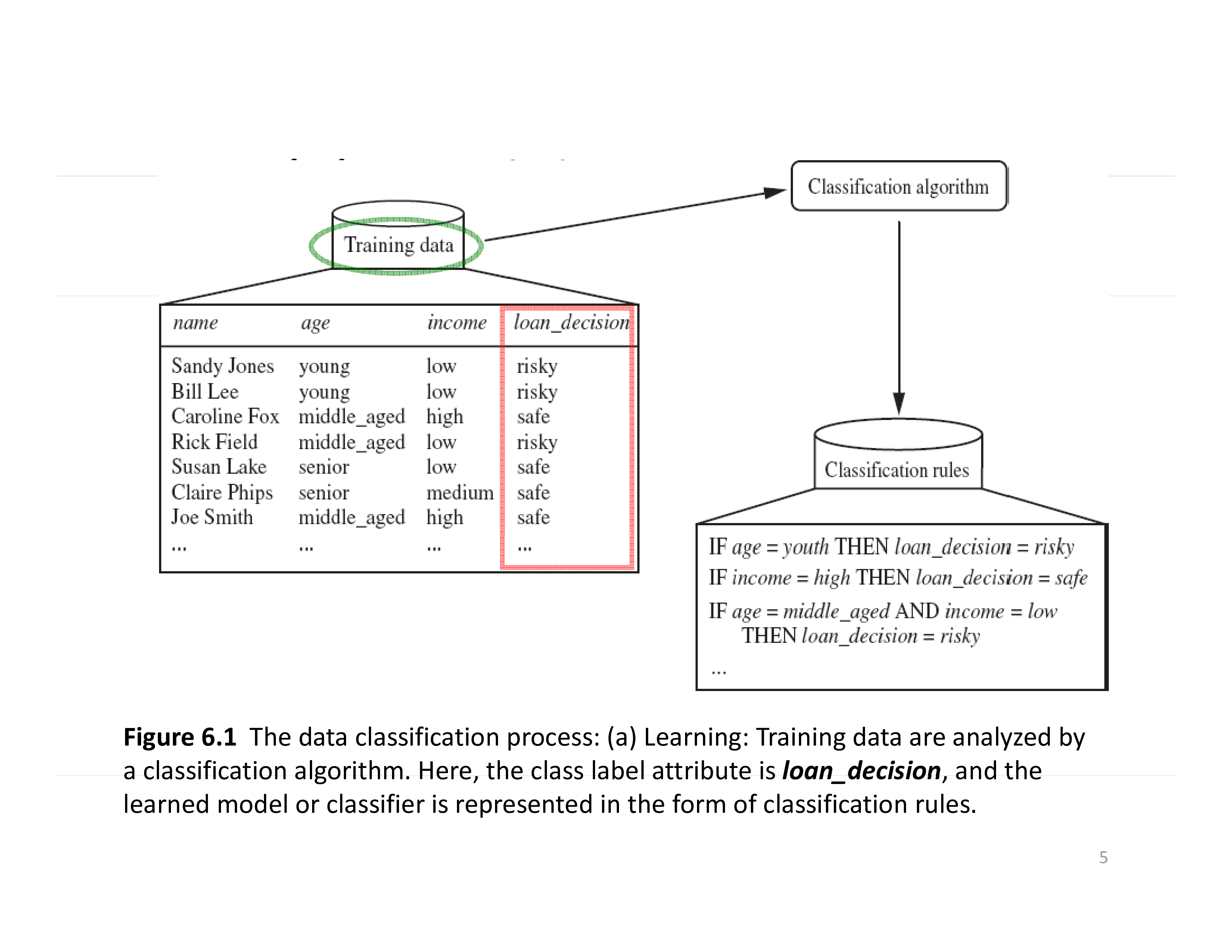

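The tuples in the database fall into two classes:

- Class P: buys_computer = "yes"
- Class N: buys_computer = "no"

With 9 tuples in class P and 5 in class N (14 in total), the entropy of the database D is:

$$\mathrm{Entropy}(D) = -\frac{9}{14}\log_2\frac{9}{14} - \frac{5}{14}\log_2\frac{5}{14} = 0.940$$

Compute the expected information requirement for each attribute, starting with the attribute age. Here S_v is the set of tuples in D whose age is v:

$$
\begin{aligned}
\mathrm{Gain}(\text{age}, D) &= \mathrm{Entropy}(D) - \sum_{v \in \{\text{Youth},\ \text{Middle-aged},\ \text{Senior}\}} \frac{|S_v|}{|D|}\,\mathrm{Entropy}(S_v) \\
&= \mathrm{Entropy}(D) - \frac{5}{14}\,\mathrm{Entropy}(S_{\text{youth}}) - \frac{4}{14}\,\mathrm{Entropy}(S_{\text{middle-aged}}) - \frac{5}{14}\,\mathrm{Entropy}(S_{\text{senior}}) \\
&= 0.246
\end{aligned}
$$

The other attributes give:

$$\mathrm{Gain}(\text{income}, D) = 0.029, \quad \mathrm{Gain}(\text{student}, D) = 0.151, \quad \mathrm{Gain}(\text{credit\_rating}, D) = 0.048$$

Figure 6.5: The attribute age has the highest information gain and therefore becomes the splitting attribute at the root node of the decision tree.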
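To make the arithmetic above concrete, here is a minimal Python sketch of the entropy and information-gain formulas. The helper names `entropy` and `information_gain` are illustrative and not part of the template. The page gives the overall class counts (9 "yes", 5 "no") and the age branch sizes (5, 4, 5), but not the class split inside each age branch; the per-branch counts below are an assumption taken from the standard textbook version of this buys_computer example.

```python
from math import log2


def entropy(counts):
    """Entropy (in bits) of a class distribution given as a list of counts."""
    total = sum(counts)
    # Classes with zero tuples are skipped (convention: 0 * log2(0) = 0).
    return -sum((c / total) * log2(c / total) for c in counts if c > 0)


def information_gain(parent_counts, partitions):
    """Gain = Entropy(D) - sum over v of |S_v|/|D| * Entropy(S_v).

    parent_counts: class counts for the whole set D, e.g. [yes, no]
    partitions: class counts for each subset S_v produced by the split
    """
    total = sum(parent_counts)
    expected = sum(sum(part) / total * entropy(part) for part in partitions)
    return entropy(parent_counts) - expected


# Class counts from the page: 9 tuples in class P ("yes"), 5 in class N ("no").
entropy_d = entropy([9, 5])

# ASSUMPTION: per-branch [yes, no] counts for age come from the standard
# textbook version of this example; the page only gives branch sizes 5, 4, 5.
age_partitions = {
    "youth": [2, 3],
    "middle_aged": [4, 0],
    "senior": [3, 2],
}
gain_age = information_gain([9, 5], list(age_partitions.values()))

# Gains reported on the page; the root split is the attribute with the largest gain.
gains = {"age": 0.246, "income": 0.029, "student": 0.151, "credit_rating": 0.048}
root_attribute = max(gains, key=gains.get)

print(f"Entropy(D)     = {entropy_d:.3f}")
print(f"Gain(age, D)   = {gain_age:.4f}")
print(f"Root attribute = {root_attribute}")
```

Run as-is, it prints Entropy(D) = 0.940, Gain(age, D) = 0.2467 and root attribute age. The unrounded gain is about 0.2467; the 0.246 on the page matches subtracting the rounded intermediate values (0.940 - 0.694).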

DISCLAIMER

Although all content has been created with the greatest care, nothing on this page can be taken as legal advice, nor does any attorney-client relationship apply.
